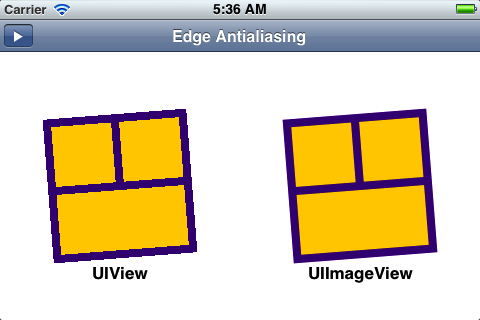

This morning while I was checking an app for misaligned elements, I happened upon a misaligned button. (If you’re not using either the iOS Simulator or Instruments to check your app for misaligned images, you should be, but that’s a post for another day.)


Checking the code, it was obvious where the problem was:

    backButton.frame = CGRectMake(5,
        (navigationBar.bounds.size.height - imageBack.size.height) / 2,
        imageBack.size.width,
        imageBack.size.height);

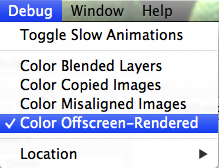

Centering code is especially prone to pixel misalignment. In this case imageBack has a size of (50, 29), while navigationBar has a height of 44 points, so the code above generates a rect with origin (5, 7.5) and size (50, 29). The fractional y origin leaves the image vertically misaligned, which in turn misaligns the child text label inside it. Both therefore show up painted in magenta when the Color Misaligned Images option is checked in the iOS Simulator's Debug menu.

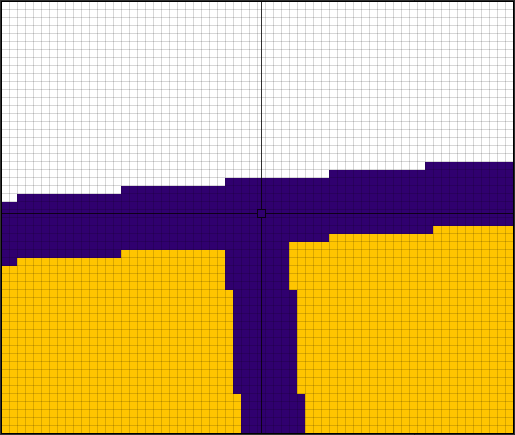

This looks like a job for CGRectIntegral, right? But when I change the code to this:

    backButton.frame = CGRectIntegral(CGRectMake(5,
        (navigationBar.bounds.size.height - imageBack.size.height) / 2,
        imageBack.size.width,
        imageBack.size.height));

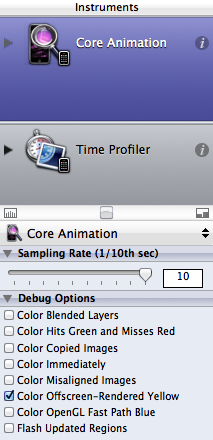

I end up with this:


The button is no longer misaligned, but it is now being stretched (hence the yellow wash). Debugging shows that CGRectIntegral has converted the input rect of (5, 7.5) x (50, 29) into (5, 7) x (50, 30), so the image is being stretched vertically by 1 point. That might be fine for a UILabel, but not for an image.

The other issue with using CGRectIntegral is that the original rect is actually fine for retina devices because they have 2 pixels per point, so a value of 7.5 actually falls on a pixel boundary, and is the optimal centering for this image. If we adjusted it to origin.y = 7 (without stretching) then it would be 2 pixels closer to the top than to the bottom on a retina device.
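To make the retina case concrete, the sketch below computes the gap above and below the button in device pixels. The `MarginsPx` struct and `margins_in_pixels` function are hypothetical names made up for this illustration, and `scale` is again assumed to be pixels per point.

```c
/* Gaps, in device pixels, above and below a child view. */
typedef struct {
    double top;
    double bottom;
} MarginsPx;

/* container_h, child_h and origin_y are in points;
   scale is pixels per point (assumed: 2 on retina). */
MarginsPx margins_in_pixels(double container_h, double child_h,
                            double origin_y, double scale) {
    MarginsPx m;
    m.top = origin_y * scale;
    m.bottom = (container_h - (origin_y + child_h)) * scale;
    return m;
}
```

For the 44-point bar and 29-point image at a scale of 2, an origin of 7.5 gives 15 pixels above and 15 below, while an origin of 7 gives 14 above and 16 below.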

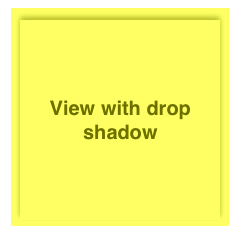

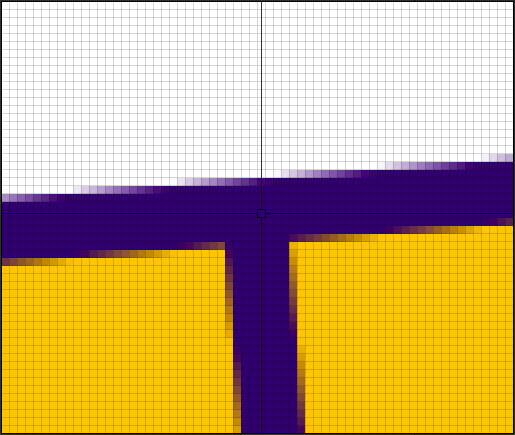

I’ve written some helper functions to correctly pixel align rectangles (not point align) for both retina and non-retina screens, and posted them in this gist.

On a non-retina screen they convert the rectangle to (5, 7) x (50, 29), pixel aligning it without stretching, while on a retina screen they leave it unmodified at (5, 7.5) x (50, 29).

This finally clears the magenta (alignment) and yellow (stretch) washes from the button:


Addendum

According to the Apple Documentation for CGRectIntegral:

A rectangle with the smallest integer values for its origin and size that contains the source rectangle. That is, given a rectangle with fractional origin or size values, CGRectIntegral rounds the rectangle’s origin downward and its size upward to the nearest whole integers, such that the result contains the original rectangle.

The fractional origin of (5, 7.5) is rounded downward to (5, 7), but I initially thought the size would be left unmodified (not rounded up) because it already comprises 2 whole integers. But that wouldn’t contain the original rectangle, whose lower right corner is positioned at (55, 36.5). In order to contain the original rectangle, the height has to be increased by 1 point from 29 to 30.